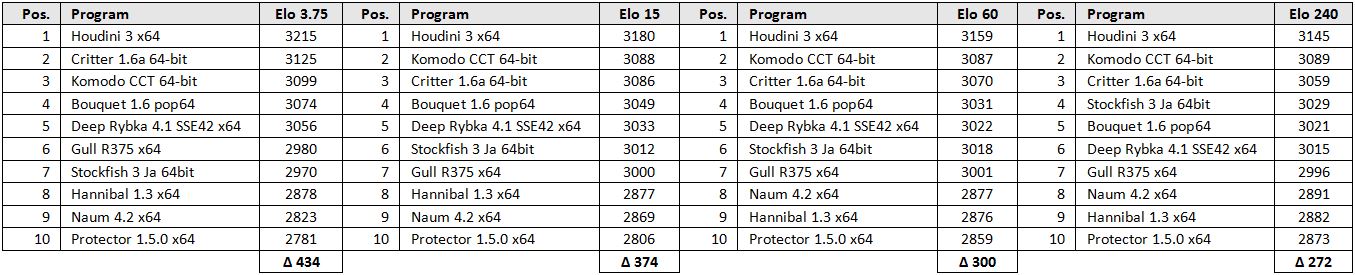

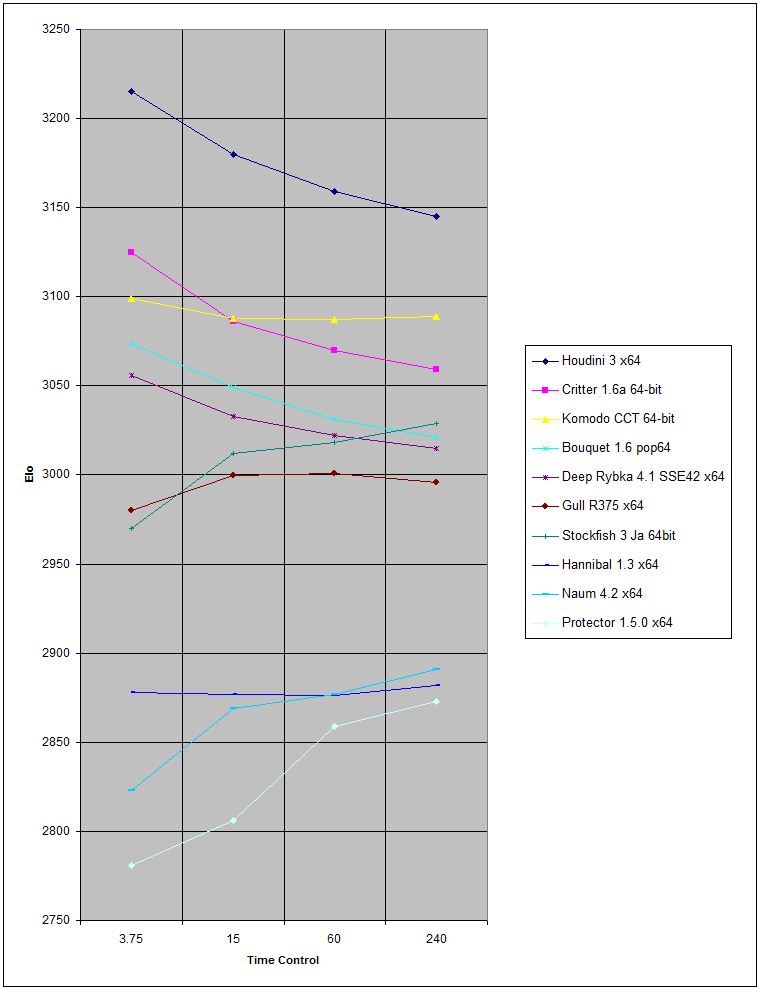

Here are the results (time controls: 3.75+0.0375, 15.00+0.15, 60.00+0.60 and 240.00+2.40, in seconds) and the corresponding diagram:

The 10 tested engines can be divided into three groups:

The balanced:

Komodo, Gull and Hannibal play at a fairly constant level across all time controls.

The losers:

Houdini, Rybka, Bouquet and Critter score especially well against the competition at shorter time controls. At longer time controls they lose between 41 and 70 Elo in this experiment.

The winners:

Protector, Naum and Stockfish increase their playing strength at longer time controls.

Stockfish gains 59 Elo, Naum 68 Elo and Protector as much as 92 Elo!
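To give a feeling for what such rating changes mean in practice, here is a minimal sketch that converts an Elo difference into an expected score. It assumes the standard logistic Elo formula; ELOStat, Bayeselo and Ordo may each use a slightly different model, so the numbers are only a rough guide. The function name `expected_score` is my own choice for illustration.

```python
def expected_score(elo_diff: float) -> float:
    """Expected score (between 0 and 1) for a player rated elo_diff points
    above the opponent, using the standard logistic Elo formula."""
    return 1.0 / (1.0 + 10.0 ** (-elo_diff / 400.0))


# Rating changes between the shortest and the longest time control,
# taken from the results in this post.
changes = {"Houdini": -70, "Stockfish": 59, "Naum": 68, "Protector": 92}

for name, diff in changes.items():
    score = expected_score(diff)
    print(f"{name:10s} {diff:+4d} Elo -> expected score {score:.3f}")
```

Under this assumption, an Elo difference of 0 gives an expected score of exactly 0.5, and a positive difference gives a score above 0.5.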


ELOStat Start Elo: 3000

| Program | Elo (3.75 s) | Elo (15 s) | Elo (60 s) | Elo (240 s) | Diff. |
|:--|--:|--:|--:|--:|--:|
| Houdini 3 x64 | 3215 | 3180 | 3159 | 3145 | -70 |
| Critter 1.6a 64-bit | 3125 | 3086 | 3070 | 3059 | -66 |
| Komodo CCT 64-bit | 3099 | 3088 | 3087 | 3089 | -10 |
| Bouquet 1.6 pop64 | 3074 | 3049 | 3031 | 3021 | -53 |
| Deep Rybka 4.1 SSE42 x64 | 3056 | 3033 | 3022 | 3015 | -41 |
| Gull R375 x64 | 2980 | 3000 | 3001 | 2996 | +16 |
| Stockfish 3 Ja 64bit | 2970 | 3012 | 3018 | 3029 | +59 |
| Hannibal 1.3 x64 | 2878 | 2877 | 2876 | 2882 | +4 |
| Naum 4.2 x64 | 2823 | 2869 | 2877 | 2891 | +68 |
| Protector 1.5.0 x64 | 2781 | 2806 | 2859 | 2873 | +92 |
| Diff. (highest minus lowest) | 434 | 374 | 300 | 272 | |
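The Diff. column and the bottom row of the table above can be recomputed with a few lines of Python. This is only a sketch: the ratings are copied from the table, while the names `diff`, `spread` and `classify` and the 20 Elo cut-off used for grouping are my own assumptions for illustration, not something stated in the experiment.

```python
# Ratings copied from the table above: (3.75 s, 15 s, 60 s, 240 s).
RATINGS = {
    "Houdini 3 x64": (3215, 3180, 3159, 3145),
    "Critter 1.6a 64-bit": (3125, 3086, 3070, 3059),
    "Komodo CCT 64-bit": (3099, 3088, 3087, 3089),
    "Bouquet 1.6 pop64": (3074, 3049, 3031, 3021),
    "Deep Rybka 4.1 SSE42 x64": (3056, 3033, 3022, 3015),
    "Gull R375 x64": (2980, 3000, 3001, 2996),
    "Stockfish 3 Ja 64bit": (2970, 3012, 3018, 3029),
    "Hannibal 1.3 x64": (2878, 2877, 2876, 2882),
    "Naum 4.2 x64": (2823, 2869, 2877, 2891),
    "Protector 1.5.0 x64": (2781, 2806, 2859, 2873),
}

THRESHOLD = 20  # hypothetical cut-off in Elo, chosen only for this sketch


def diff(ratings, start=0, end=3):
    """Rating change between two time controls (indices into the tuple)."""
    return ratings[end] - ratings[start]


def spread(column):
    """Gap between the highest and lowest rated engine at one time control."""
    values = [r[column] for r in RATINGS.values()]
    return max(values) - min(values)


def classify(change, threshold=THRESHOLD):
    """Assign a group label based on the rating change."""
    if change <= -threshold:
        return "loser"
    if change >= threshold:
        return "winner"
    return "balanced"


for name, ratings in RATINGS.items():
    change = diff(ratings)
    print(f"{name:26s} {change:+4d}  {classify(change)}")

print("Spread per time control:", [spread(c) for c in range(4)])
```

Calling `diff(ratings, start=1)` instead gives the change from 15 s to 240 s. With the assumed 20 Elo cut-off, the labels match the three groups described in this post, but a different cut-off could move engines such as Gull into another group.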

| Program | Diff. (15 s to 240 s) |
|:--|--:|
| Houdini 3 x64 | -35 |
| Critter 1.6a 64-bit | -27 |
| Komodo CCT 64-bit | +1 |
| Bouquet 1.6 pop64 | -28 |
| Deep Rybka 4.1 SSE42 x64 | -18 |
| Gull R375 x64 | -4 |
| Stockfish 3 Ja 64bit | +17 |
| Hannibal 1.3 x64 | +5 |
| Naum 4.2 x64 | +22 |
| Protector 1.5.0 x64 | +67 |

The final results for all time controls, with ratings (Elo, Bayeselo and Ordo) and individual results, are available on my website (Experimental Rating Lists).

http://www.fastgm.de

Regards,

Andreas