It is amazing they handicapped the AI, limiting how fast it could play.

Assume their program could perform as many actions per minute as the number of chess positions per minute Lc0 can calculate.

At about 70,000 positions per second, that is 60 * 70,000 = 4.2 million actions per minute. They allowed just 250 actions per minute?!
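Spelled out, the comparison looks like this. It is a trivial sketch that uses only the post's own figures. Treating 70,000 as a per-second rate is an assumption, implied by the multiplication by 60:

```python
# Figures from the post: Lc0 at ~70,000 positions per second (assumed to be
# a per-second rate, since the post multiplies by 60) and AlphaStar's cap
# of 250 actions per minute.
LC0_POSITIONS_PER_SECOND = 70_000
SECONDS_PER_MINUTE = 60
ALPHASTAR_APM_CAP = 250

# Hypothetical APM if every Lc0 position evaluation were one game action.
hypothetical_apm = LC0_POSITIONS_PER_SECOND * SECONDS_PER_MINUTE
# How many times slower the cap is than that hypothetical rate.
slowdown_factor = hypothetical_apm // ALPHASTAR_APM_CAP

print(hypothetical_apm)  # 4200000
print(slowdown_factor)   # 16800
```

In other words, the cap is roughly 1/16,800 of that hypothetical rate.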

They also restricted the program's ability to multitask. Wow.

deepmind's alphastar beats pros in starcraft ii

-

Jesse Gersenson

- Posts: 593

- Joined: Sat Aug 20, 2011 9:43 am

-

Werewolf

- Posts: 1795

- Joined: Thu Sep 18, 2008 10:24 pm

Re: deepmind's alphastar beats pros in starcraft ii

I'd love to know the reasoning behind the Probe plan. I was wondering whether the idea is one of redundancy: "I'll assume you'll get into my base and kill 5 workers. But it's OK, because I built 21, and 21 - 5 = 16, which is the optimal number."

Apart from that I have no clue why AlphaStar did it.

-

Laskos

- Posts: 10948

- Joined: Wed Jul 26, 2006 10:21 pm

- Full name: Kai Laskos

Re: deepmind's alphastar beats pros in starcraft ii

Joost Buijs wrote: ↑Mon Jan 28, 2019 1:34 pm

Interesting analysis:

https://medium.com/@aleksipietikinen/an ... 02fb8344d6

I don't know this game, but it doesn't seem very well suited to testing AI capabilities. Did they have a decent, hard-coded AI of the usual 1990s kind for this game before AlphaStar? How did it fare at its best, unrestrained strength (the strength of those AIs was often restricted)? If micromanagement alone gives superhuman tactical ability, that was already possible 20 years ago with the standard techniques of the time. And if these tactics weigh heavily in the outcome, then I have doubts about AlphaStar's "understanding" of the "mechanics" or "strategy" of this game: the tech line, economy, army, the balance between them, all that strategic thinking.

I haven't read anything about AlphaStar apart from this link. Did I understand correctly that it uses supervised learning from millions of human games? How does "zero"-style reinforcement learning work here? I understand it is a famous game played online by many millions of people, and millions of games are stored. So both the fame of beating the best humans and the databases make it a tempting benchmark for DeepMind.

But I would be curious about AI for real turn-based, hex-based strategy games like "Korsun Pocket" (2003). It involves logistics, supply, and a strategic real-world battle map of about 75 x 50 hexes, with all the quirks of the terrain (which affect supply and logistics too), and so on. Because it is turn-based, if the game is played at, say, 2 hours per turn (some people play it online at 1 day per turn, but that is just for convenience), a good human will have no problems with "micromanagement", which is not a big deal in this game anyway. The important decisions are made more at the operational level. Good tactics matter, but good small-scale tactics have clear limits and are learnt quickly by good humans (and nobody pressures you to make some number of clicks per minute). Here is about 1/6 of the total map of a game I just started, at turn 1 (there are 48 turns in total):

Here is the strategic map of the front line (the part shown above is the rectangle on the right, slightly lower down):

The idea is that the Soviets want to envelop and encircle, from the left and right shoulders, the mass of the German army by the Dnepr river (the thick, curved blue line at the top), in the middle-lower part of the map. The Germans try to hold the flanks by bringing brigades and divisions from the lower and/or upper part of the map, with reinforcements arriving from the bottom of the map to the shoulders or the neck of the attempted encirclement. At best (for the Germans), the encirclement is thwarted without much ground lost. At worst, the mass of the Germans is completely sealed off and annihilated in the pocket. Victory Points are awarded for military losses, territory held, and towns and road/rail junctions held. Under the game's scoring, it is very balanced between two good human players. As happened in early 1944, the Soviets almost managed to seal off the pocket, but a tenuous neck still held, and some 40% of the Germans escaped through it, abandoning their equipment. The victory threshold in this game lies close to this outcome.

Here, the operational level, the broader command-tactical level (not stupid micromanagement of individual units), and even intuition about the supply network (from just glancing at the map) are much more important than pure small-scale tactics. Human intuition (again, from glancing at the road/rail network, terrain difficulty, the state of your units, etc.) plays a much bigger role than in, say, Go. The map is also much larger than a Go board and very diversified, while still being comprehensible and intuitive to a human.
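The following puts "much larger than in Go" into numbers. It uses the approximate 75 x 50 map size given earlier in the post. The exact hex count of the real map may differ, and only cell counts are compared:

```python
# Approximate map size from the post versus a standard 19 x 19 Go board.
# Terrain, roads, rails and units are ignored; this only counts cells.
KORSUN_POCKET_HEXES = 75 * 50
GO_BOARD_POINTS = 19 * 19

ratio = KORSUN_POCKET_HEXES / GO_BOARD_POINTS
print(KORSUN_POCKET_HEXES, GO_BOARD_POINTS, round(ratio, 1))  # 3750 361 10.4
```

That is about ten times as many cells, before even counting that each hex has six neighbours, compared with four for an interior Go point.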

Sure, DeepMind won't bother with such "fringe" games, played by perhaps tens of thousands of people at most, with no large databases and so on. But if I saw an AI literally having intuitions about the whole map, the terrain, the supply and logistics net, the probable reinforcements, the probable weather, the army parts, and the main thrusts of attack and defense, it would mean much more to me than what the AI does in StarCraft 2. Admittedly, this game doesn't have the tech and economy building.

-

Werewolf

- Posts: 1795

- Joined: Thu Sep 18, 2008 10:24 pm

Re: deepmind's alphastar beats pros in starcraft ii

Laskos wrote: ↑Tue Jan 29, 2019 9:15 am

I don't know this game, but it seems not very adequate to check for AI capabilities. Did they have a decent, hardcoded, usual from 1990s AI for this game before AlphaStar? How did it fare at its best unrestrained strength (they often restrained the strength of those AIs)? If by micromanaging one gets superhuman tactical abilities, it was possible to do so 20 years ago as well by standard techniques of those times. And if these tactics weight heavily in the outcome, then I have doubts about the AlphaStar "understanding" of the "mechanics" or "strategy" of this game. That tech line, economy, army, the balance, all that strategic thinking.

I didn't read anything of AlphaStar aside this link. I understood it correctly that it uses supervised learning from millions of human games? How "zero", reinforcement learning works here? I understand that it is a famous game played online by many millions, and millions of games are stored. So both the fame of beating the best humans and the databases are tempting for DeepMind to use it as a benchmark.

Sure, DeepMind won't bother with such "fringe" games played by maybe dozens of thousands only, with no large databases and so on, but if I see AI literally having intuitions about the whole map, the terrain, the supply and logistics net, the probable reinforcements, the probable weather, the army parts, the main thrusts of attack and defense, it would mean much more to me than what AI does in Starcraft 2. Although this game doesn't have the tech and economy building.

Quite a few points here, but here goes: SC2 does have most of what you describe above. It's much more than just "micro". The built-in AI is far better than the 1990s C&C / EA Games AI in RTS games. SC2 is difficult both tactically and strategically.

But the built-in AI loses to a good human player in both micro and, especially, strategy. In particular, it doesn't understand choke points.

However, having said all that, I would prefer it if the game were less "click heavy", for want of a better way of putting it.

-

Ozymandias

- Posts: 1532

- Joined: Sun Oct 25, 2009 2:30 am

Re: deepmind's alphastar beats pros in starcraft ii

The main problem I see with the built-in AI is that it doesn't seem to learn. I always use the same strategy to beat it, and games rarely last more than a few minutes. It only wins when it randomly selects a suitable counter-strategy from among its different options. Human opponents can't be surprised twice in exactly the same way (once you go a little way up the ladder).

Turn-based games are the best way to avoid "micro" being a requirement, but I don't know of any up-to-date ones. MissionForce: CyberStorm was perfect but was quickly discontinued. Is there anything like it available on the market today?

-

Werewolf

- Posts: 1795

- Joined: Thu Sep 18, 2008 10:24 pm

Re: deepmind's alphastar beats pros in starcraft ii

Ozymandias wrote: ↑Tue Jan 29, 2019 12:06 pm

The main problem I see with the built-in AI, is that it doesn't seem to learn. I always use the same strategy to beat it, and games rarely last more than a few minutes. Only when it randomly selects, among the different options, a suitable counter strategy, does it win. Human opponents can't be surprised twice exactly the same way (once you go a little bit up the ladder).

Turn based games are the best way to avoid "micro" being a requirement, but I don't know of any up to date one. MissionForce: CyberStorm was perfect, but quickly discontinued, is there anything like that available on the market today?

Civilisation? That had a very strong A.I.

Can you win in a few minutes in SC2 against an elite AI with a random build order? That's very impressive if you can. The fastest I've done it is 13 minutes, but I tend not to do rush builds.

-

Laskos

- Posts: 10948

- Joined: Wed Jul 26, 2006 10:21 pm

- Full name: Kai Laskos

Re: deepmind's alphastar beats pros in starcraft ii

Werewolf wrote: ↑Tue Jan 29, 2019 10:14 am

Quite a few points here, but here goes: SC2 does have most of what you describe above. It's much more than just "micro". The A.I built in is far better than the 1990's C&C / EA Games AI on RTS games. SC2 is difficult both tactically and strategically.

But the AI built in loses to a good human player in both micro and, especially, strategy. In particular it doesn't understand choke points.

However, having said all that I would prefer it if the game was less "click heavy" for want of putting it.

The built-in AI at its best can lose to humans in micro? Unit by unit? Why is that, when micro here seems to depend heavily on click speed? That commentator posted a video from 2011 showing how a regular, simple bot (like those used for ages in RTS games) exploits superhuman micro at the unit level:

100 zerglings vs 20 sieged tanks should be a meat grinder... but when the lings are controlled by the Automaton micro bot, the outcome changes.

https://www.youtube.com/watch?v=IKVFZ28ybQs
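The trick usually described for this video is per-unit reaction speed. Whenever a tank fires at one zergling, the other lings near it are moved out of the splash area. Below is a minimal sketch of such a rule. It rests on hypothetical assumptions: the bot knows which ling each shot targets, units move instantly, and the splash radius and margin are made-up numbers, not StarCraft II values. It is not Automaton's actual code:

```python
import math
from dataclasses import dataclass

# Hypothetical values, not SC2's real splash radius.
SPLASH_RADIUS = 1.25
SAFETY_MARGIN = 0.1


@dataclass
class Ling:
    x: float
    y: float


def splash_victims(lings, target):
    """Count lings (including the target) inside the splash circle."""
    tx, ty = lings[target].x, lings[target].y
    return sum(math.hypot(o.x - tx, o.y - ty) <= SPLASH_RADIUS for o in lings)


def dodge_splash(lings, target):
    """Push every other ling near the targeted one just outside the splash.

    Simplification: movement is instantaneous. A real bot has to issue
    move commands in the fraction of a second before the shot lands.
    """
    tx, ty = lings[target].x, lings[target].y
    for i, ling in enumerate(lings):
        if i == target:
            continue
        dx, dy = ling.x - tx, ling.y - ty
        dist = math.hypot(dx, dy)
        if dist > SPLASH_RADIUS:
            continue  # already safe
        if dist == 0.0:  # stacked exactly on the target: pick any direction
            dx, dy, dist = 1.0, 0.0, 1.0
        # Move along the same direction to just beyond the splash radius.
        scale = (SPLASH_RADIUS + SAFETY_MARGIN) / dist
        ling.x, ling.y = tx + dx * scale, ty + dy * scale


lings = [Ling(0, 0), Ling(0.5, 0), Ling(0, 0.5), Ling(3, 3)]
print(splash_victims(lings, 0))  # 3
dodge_splash(lings, 0)
print(splash_victims(lings, 0))  # 1
```

Each tank shot then kills one ling instead of a clump, which is presumably why the outcome flips. A human cannot issue these per-shot commands for 100 units.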

This is a serious issue. Strategy can be short-circuited by superhuman micro exploits. These don't even have to be used most of the time, only when needed. And that AlphaStar CAN use the exploit is probably visible here:

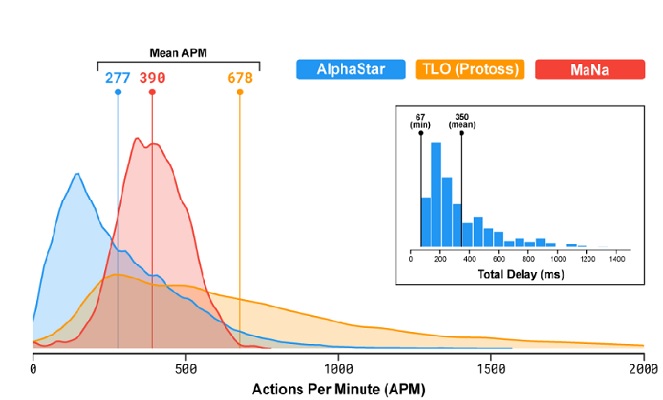

These are APM, not EPM (effective actions per minute, i.e. useful clicks). Take a top player like MaNa. For a top player like him, about half of the clicks are spam clicks and are useless, especially at very high APM. AlphaStar probably has very few useless clicks. So, to read EPM off this plot, you have to scale MaNa's APM down to half of the red curve, and to even less than half in the right tail of his APM, where there are a lot of spam clicks. Comparing the real plots, AlphaStar has an insanely long tail in EPM, often reaching inhuman speeds. Even MaNa's average EPM is probably about 200, compared to AlphaStar's 277. But it is this long tail that is devastating, because it is not at all negligible in "mass" once MaNa's speeds are divided by two (and by more than two in the tail). Basically, AlphaStar probably uses inhuman micro whenever it is necessary. This is an exploit, and might be a shortcut to the whole goal of the game. I am not sure AlphaStar is really proficient at the human-like balancing of tech line, economy and army, which is where it would be most interesting to see its feats.
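The post's adjustment can be sketched as follows: halve a human's APM to approximate EPM, and discount the high-APM tail even more. Several inputs are hypothetical, chosen only to show how two distributions with comparable averages can differ sharply in the tail:

- the spam fractions
- the 500 APM tail threshold
- the 400 EPM "inhuman" cut-off
- all sample values (these are not MaNa's or AlphaStar's measured data)

Treating AlphaStar's EPM as roughly equal to its APM is the post's own assumption.

```python
from statistics import mean


def human_epm(apm, spam_fraction=0.5, tail_start=500, tail_spam_fraction=0.6):
    """Rough effective-actions estimate for a human player.

    Follows the post's assumption that about half of a top player's actions
    are spam, and more than half in the high-APM tail. The 500 APM threshold
    and the 0.6 tail spam fraction are illustrative guesses.
    """
    fraction = tail_spam_fraction if apm > tail_start else spam_fraction
    return apm * (1 - fraction)


def tail_share(values, threshold):
    """Fraction of samples strictly above `threshold` (the "tail mass")."""
    return sum(v > threshold for v in values) / len(values)


# Hypothetical per-interval samples, invented for illustration only.
mana_apm = [300, 350, 400, 450, 600, 800]
alphastar_epm = [100, 150, 150, 200, 500, 600]  # assumes EPM ~= APM

mana_epm = [human_epm(a) for a in mana_apm]

print(round(mean(mana_apm)), round(mean(mana_epm)), round(mean(alphastar_epm)))  # 483 218 283
print(tail_share(mana_epm, 400), tail_share(alphastar_epm, 400))  # 0.0 0.3333333333333333
```

This mirrors the post's argument. The averages (218 vs 283 here) look comparable, but only the second distribution puts any mass above the cut-off.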

Anyway, I am not familiar with the game, but the argument I read sounded pretty convincing. Also, why pick a game that allows simple inhuman exploits, doable by a simple bot from 2011? Yes, I understand: notoriety, popularity and databases. But I would think of a game that doesn't need AI restrictions, like the excellent turn-based one I presented. It has longish turns, where micro for top players is almost irrelevant and comes almost automatically, without any need for fast clicks. There is no need to restrict the AI in any way. Maybe DeepMind's AI could find an exploit even in this game, an imbalance, but it would be of a totally different kind.

As for recent incarnations of hex-based, turn-based, operational-level wargames, TOAW IV (2017) is very good and quite popular (though not, of course, with the huge popularity of StarCraft and Civilization). It has numerous very good scenarios and a large fanbase, many of whom contribute scenarios and bug reports.

-

smatovic

- Posts: 2642

- Joined: Wed Mar 10, 2010 10:18 pm

- Location: Hamburg, Germany

- Full name: Srdja Matovic

Re: deepmind's alphastar beats pros in starcraft ii

Laskos wrote: ↑Tue Jan 29, 2019 9:15 am

Anyway, I am not familiar with the game, but the argument I read sounded pretty convincing. Also, why pick a game allowing for simple inhuman exploits, doable by a simple bot from 2011? Yes, I understand, notoriety, popularity and databases, but I would think of a game not needing AI restrictions, like the one excellent turn-based I presented. Longish turns, where micro for top players is almost irrelevant and comes almost automatically, without any need of fast clicks. No need to restrict AI in any way. Maybe DeepMind AI can find an exploit even in this game, an imbalance, but it would be of a totally different kind.

They cracked Go, Shogi, Chess and now StarCraft.

I think the kind of turn-based strategy game you suggest would be no challenge for DeepMind...

AlphaStar has 200 years of accumulated StarCraft play. With a turn-based strategy game, even a complex one, they could train their agents on thousands of years of play and develop strategies humans have not come up with.

I myself would not be impressed by any turn-based strategy game DeepMind is able to crack, but RTS with StarCraft was another kind of challenge.

btw: did the human players have any hardware advantage compared to AlphaStar?

--

Srdja

-

Laskos

- Posts: 10948

- Joined: Wed Jul 26, 2006 10:21 pm

- Full name: Kai Laskos

Re: deepmind's alphastar beats pros in starcraft ii

smatovic wrote: ↑Tue Jan 29, 2019 5:09 pm

They cracked Go, Shogi, Chess and now StarCraft.

I think such a round based strategy game you suggest would be no challenge for Deepmind....

AlphaStar has 200 years of accumulated StarCraft play,

with an round based strategy game, even a complex one,

they could train their agents with thousands of years,

and develop strategies humans did not come up with.

Myself would not be impressed by any round based strategy game Deepmind is able to crack,

but RTS with StarCraft was another kind of challenge.

btw: did the human players had any hardware advantage compared to AlphaStar?

--

Srdja

I am not sure that a plethora of basic minibots like Automaton from 2011 wouldn't have beaten any human at StarCraft without any fancy ML techniques. AFAIK, such things are usually called "cheats" in these games. I am reserved about being impressed, especially by the holistic strategy level of AlphaStar.

OTOH, I am not sure my proposed turn-based strategy games are any easier than Go when played against top players of those games. I think the level of humans trained intensively on these games from a young age could be hard for an ML AI to reach. I'm not sure, granted. The games may have other "cheats" undiscovered by humans, but of a completely different kind, and certainly not exploitable by those kinds of minibots.

For a novice like me, these are more intuitive and more real-life games than Go. Yet they are highly complex at all levels of abstraction and of strategy/tactics, and classical bots have no tactical advantage in them.

-

Ozymandias

- Posts: 1532

- Joined: Sun Oct 25, 2009 2:30 am

Re: deepmind's alphastar beats pros in starcraft ii

Me neither. I like to keep my guys alive, and in Blizzard games the best way to achieve that is to go aerial. In WCIII I used Gryphons, and in SC2 I go for Banshees, which are even better because of their stealth mode. If the game is well played, you don't lose a single unit. The problem is that, other than closing the door, I don't do anything to defend the base, which means that when the AI rushes, I'm done for. What irks me is that even though the computer knows I have nobody manning the base (it always sends a drone to spy on you), it doesn't attack unless it has randomly chosen the appropriate build. One has to wonder what spying does for it anyway.
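The complaint is that the scouting information never feeds into the decision. Below is a minimal sketch of the difference, assuming a hypothetical AI with three fixed builds. The build names and the reactive rule are illustrative and say nothing about how Blizzard's AI is actually written:

```python
import random

# Hypothetical build names; not taken from Blizzard's AI.
BUILDS = ("economy", "timing_attack", "rush")


def pick_build_random(rng, scouted_defenders):
    """Behaviour the post describes: the scout's report is ignored."""
    # scouted_defenders is deliberately unused.
    return rng.choice(BUILDS)


def pick_build_reactive(rng, scouted_defenders):
    """Hypothetical alternative: punish an undefended base with a rush."""
    if scouted_defenders == 0:
        return "rush"
    return rng.choice(BUILDS)


def rush_rate(picker, games=3000, seed=1):
    """Share of simulated games in which `picker` rushes an undefended base."""
    rng = random.Random(seed)
    rushes = sum(picker(rng, scouted_defenders=0) == "rush" for _ in range(games))
    return rushes / games


print(rush_rate(pick_build_random))    # roughly 1/3
print(rush_rate(pick_build_reactive))  # 1.0
```

With a random pick among three builds, an undefended base gets punished in only about a third of games. That matches the "only when it randomly selects" behaviour described earlier in the thread. The reactive rule punishes it every time.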

It's also surprising that in all the time since WCIII, they haven't fixed the AI so that it doesn't lose to such a simple strategy. Honestly, it's nothing special, unlike what I used to do in Dune II: Battle for Arrakis, where I was able to complete any mission in under 20 minutes. Try that in Ordos mission 9.