However, when you break a "rule" you suffer the consequences. And in this case there _is_ a consequence in terms of Elo. You can reach a search deep enough where you _never_ replace anything and your search hits a wall, while a more correct implementation will not do that...

Chan Rasjid wrote:
I think the 'NEVER' rule applies only to some 'standard' replacement schemes; in chess programming every other person is trying out all manner of tricks; my replacement scheme is this, in order of priority:

bob wrote: ...

Here is some important advice that quite a few overlook: "NEVER, and I do mean NEVER, fail to store a current position in the hash table. Let me repeat, NEVER. You can do whatever you want to choose what to replace, but you MUST replace something."

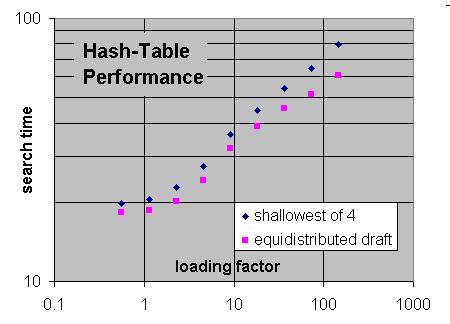

That seems counter-intuitive, but it is critical. I use a bucket of 4 entries and choose the best of the 4 (actually the worst, but you get my drift) to replace, and I always store the entry from a fail-high, a fail-low, or an EXACT search.

1) 'effective depth' - this is a combination of depth + age, to try to retain some old_high_depth positions if they are not too old; age = 16 at start of game; at rootsearch(), age += 1 + !followPV; for every game move that moves a pawn, age += const; for nullmove, age -= const; etc.

2) type - in order of priority: exact, fail-low, fail-high;

3) age == search sequence number.

With my scheme, ageing is by: depth | type | age.

All four slots might hold positions better than the current node, in which case nothing is stored and the 'NEVER' rule is broken.
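For readers who want to see how such a priority scheme can end up storing nothing, here is a minimal sketch in C. It is not Rasjid's code: the `Slot` layout, the packed `priority()` key and the name `priority_store` are all made up for illustration, the effective depth is simply taken as an input (the exact depth + age formula is not given above), and a newer search sequence number is assumed to rank higher.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define BUCKET_SIZE 4

/* Type rank, assumed ordering: exact > fail-low > fail-high. */
enum { FAIL_HIGH = 0, FAIL_LOW = 1, EXACT = 2 };

/* Hypothetical slot layout, for illustration only. */
typedef struct {
    uint64_t key;       /* position signature */
    uint8_t  eff_depth; /* 'effective depth' (depth combined with age) */
    uint8_t  type;      /* FAIL_HIGH, FAIL_LOW or EXACT */
    uint8_t  age;       /* search sequence number */
} Slot;

/* Pack depth | type | age so that one integer compare orders the slots. */
static uint32_t priority(const Slot *s)
{
    return ((uint32_t)s->eff_depth << 16) | ((uint32_t)s->type << 8) | s->age;
}

/* Returns true if the candidate was stored, false if every slot in the
 * bucket outranks it. The false case is where the 'NEVER' rule is broken. */
bool priority_store(Slot *bucket, Slot candidate)
{
    int worst = 0;
    assert(bucket != NULL);

    /* Find the lowest-priority slot in the bucket. */
    for (int i = 1; i < BUCKET_SIZE; i++) {
        if (priority(&bucket[i]) < priority(&bucket[worst]))
            worst = i;
    }

    /* All four slots are "better" than the current node: store nothing. */
    if (priority(&candidate) < priority(&bucket[worst]))
        return false;

    bucket[worst] = candidate;
    return true;
}
```

Once a bucket fills up with high-priority entries, every shallower position that hashes there is dropped, which is the situation bob is warning about.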

In Crafty I make multiple passes in HashStore().

1. Look for an exact signature match, overwrite if found regardless of draft or anything else.

2. Look for an "old" entry using the "age" trick I've used forever... I replace an entry with the wrong age. If there are several, I replace the one with the wrong age _and_ the lowest draft.

3. I replace the entry with the lowest draft, period.

There is no "type priority" in Crafty. Replace an entry from a previous search, or replace the entry from the current search with the lowest draft, all in a bucket of 4. I tried several dozen alternatives before settling on this after lots of testing...
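The three passes described above could be sketched roughly as follows. This is not Crafty's actual HashStore(): the `HashEntry` layout, the `hash_store` name and its parameters are assumptions made for the example, and a zero-initialised slot is simply treated as an entry with a stale age.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BUCKET_SIZE 4

/* Hypothetical entry layout, for illustration only. */
typedef struct {
    uint64_t signature; /* full hash signature of the position */
    int16_t  value;     /* score or bound */
    uint8_t  draft;     /* remaining depth when the entry was stored */
    uint8_t  age;       /* id of the search that stored the entry */
    uint8_t  type;      /* fail-high, fail-low or exact */
} HashEntry;

/* Always stores; returns the index of the slot that was overwritten. */
int hash_store(HashEntry *bucket, uint64_t signature, int16_t value,
               uint8_t draft, uint8_t type, uint8_t current_age)
{
    int victim = -1;
    assert(bucket != NULL);

    /* Pass 1: exact signature match, overwrite regardless of draft. */
    for (int i = 0; i < BUCKET_SIZE; i++) {
        if (bucket[i].signature == signature) {
            victim = i;
            break;
        }
    }

    /* Pass 2: among entries with the wrong age, take the lowest draft. */
    if (victim < 0) {
        for (int i = 0; i < BUCKET_SIZE; i++) {
            if (bucket[i].age != current_age &&
                (victim < 0 || bucket[i].draft < bucket[victim].draft))
                victim = i;
        }
    }

    /* Pass 3: no stale entry, so replace the lowest draft, period. */
    if (victim < 0) {
        victim = 0;
        for (int i = 1; i < BUCKET_SIZE; i++) {
            if (bucket[i].draft < bucket[victim].draft)
                victim = i;
        }
    }

    bucket[victim] = (HashEntry){ .signature = signature, .value = value,
                                  .draft = draft, .age = current_age,
                                  .type = type };
    return victim;
}
```

Note that there is no path through the function that returns without writing: even a draft-1 entry evicts something, which is the whole point of the 'NEVER' rule.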

I don't understand why your type priority is FH/FL/EX. The main idea of a transposition table is to store the 'most-helpful' positions; it is for this reason that higher depth has preference, as it saves the most time. For type, exact is sure to cause a return (unless a scheme does not cut off on pv); fail-low searches all nodes and is expensive to do; fail-high might just have searched 1/2 moves.

Rasjid.