In regards to the analysis that Lichess does, they are open-sourced, so you would be able to review their code to try to understand a bit more of how they have implemented this. In particular, it looks like they have this implemented in the

Fishnet repository (which is an application that people can install on their computer to donate compute resources to Lichess).

In particular, it looks like Lichess will break down an analysis request into the list of positions that occurred in the game. It will then iterate over these positions in reverse order, using Stockfish to analyze the position. Prior to sending the go command, it will ensure that the UCI_AnalyseMode option is set to true on the engine and that the skill level is set to 20. It also appears that the search is restricted for each position by node count, with a different limit depending on whether the HCE or NNUE version of Stockfish is being used. It looks like the Lichess server dictates these limits that are used, but according to the Protocol documentation, it appears that Stockfish 15 is limited to 1,500,000 nodes, Stockfish 14 to 2,100,000 nodes, and classical (or HCE) to 4,050,000. But it also indicates 7,000ms per ply (though I'm not sure that this is accurate to the current code).

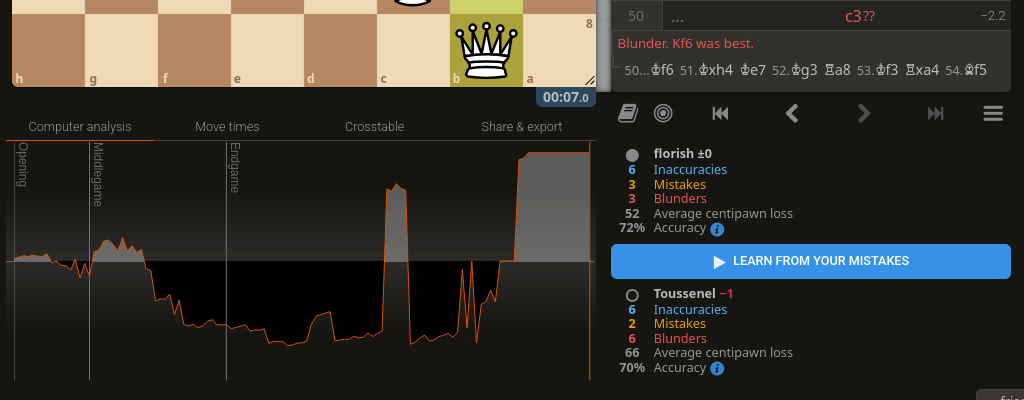

In regards to the analysis of each move that was played compared to what the engine likes,

Lichess also has this article explaining their accuracy calculation.

I'm not wholly familiar with how the Fishnet code works, but it seems that the basic idea is to split out the game into each position, and then iterate over them from the end to the beginning. For each of these positions, conduct a limited search to identify the best move and the evaluation. Then you can collect this information against what was actually played and identify the moves that had the most significant deviation from the engine's best play.